AI Legal Tools: How to Evaluate Accuracy and Risk

AI is now embedded in everyday litigation work, from summarizing medical records to drafting demand letters and building deposition outlines. The upside is real: faster throughput and better organization of sprawling files. The downside is also real: a single silent error can change settlement posture, impair credibility, or create confidentiality exposure.

Evaluating AI legal tools is therefore less about “does it sound smart?” and more about answering two questions:

- How accurate is it for the specific tasks you will use it for?

- What legal, ethical, security, and operational risks does it introduce, and how do you control them?

This guide gives a practical, litigation-focused framework you can use to evaluate accuracy and risk before you roll a tool out to your team.

Start with use-case scoping, because risk is task-dependent

Accuracy expectations and risk controls should vary by what the tool is doing.

A tool used for first-pass triage of an intake packet is not the same as a tool that drafts a demand letter that will go to an adjuster, or generates a deposition outline that shapes questioning strategy. The more the output will be relied upon, the higher the standard you need for verification, auditability, and governance.

A helpful way to scope is to define:

- Output type: summary, extraction, chronology, draft letter, outline, analytics.

- Source types: PDFs, scanned records, EHR exports, claims docs, discovery.

- Reliance level: internal-only, attorney review required, client-facing, opposing-party-facing, court-facing.

- Time sensitivity: settlement demand deadlines, discovery cutoffs, trial prep.

Once you know the reliance level, you can right-size testing and guardrails.

Accuracy: what “good” looks like for litigation workflows

Accuracy in legal AI is not one number. You want to separate at least four dimensions:

- Fidelity to the record: statements are supported by the uploaded documents.

- Completeness: the tool captures the important facts, not just the obvious ones.

- Precision: it does not invent details, dates, or medical findings.

- Usability: the format is genuinely litigation-ready, with clear organization and traceable support.

Build a small, representative benchmark set

Before a firm-wide rollout, build a benchmark set of matters that represent your real work.

Aim for variety:

- A clean case file with well-labeled PDFs.

- A messy file with duplicates, partial scans, and inconsistent naming.

- A medically complex file (multi-provider, long timeline).

- A causation-disputed file.

- A liability-disputed file.

Keep the set small enough to review carefully, but large enough to surface failures. In practice, many firms start with 10 to 30 matters for an initial evaluation, then expand.

Decide what counts as an error, and how severe it is

Not every flaw is equal. A formatting issue is annoying; a wrong diagnosis is catastrophic.

Define error severity tiers before you test so your team can grade consistently:

- Critical: changes legal meaning, damages evaluation, causation, liability, or could mislead a recipient.

- Major: materially incomplete or misleading, but likely caught by normal review.

- Minor: stylistic, organizational, or low-impact omissions.

This is also where you decide what your “acceptable” failure rate is for each task type. For example, you might accept more minor errors in a first-pass chronology than in a demand letter draft.
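To make the tiers and per-task tolerances concrete, here is a minimal Python sketch. The task names, the `passes` helper, and the tolerance numbers are all hypothetical placeholders, not recommended thresholds. The point is only that writing tolerances down per task type lets every reviewer apply the same pass/fail rule.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    CRITICAL = "critical"
    MAJOR = "major"
    MINOR = "minor"


# Hypothetical per-task tolerances: maximum errors of each tier allowed in one output.
# These numbers are illustrative placeholders, not recommendations.
TOLERANCES = {
    "first_pass_chronology": {Severity.CRITICAL: 0, Severity.MAJOR: 1, Severity.MINOR: 5},
    "demand_letter": {Severity.CRITICAL: 0, Severity.MAJOR: 0, Severity.MINOR: 2},
}


@dataclass
class GradedError:
    description: str
    severity: Severity


def passes(task_type: str, errors: list[GradedError]) -> bool:
    """Return True only if every severity tier stays within its tolerance."""
    for tier, limit in TOLERANCES[task_type].items():
        count = sum(1 for e in errors if e.severity is tier)
        if count > limit:
            return False
    return True


errors = [
    GradedError("Inconsistent heading style", Severity.MINOR),
    GradedError("Omitted one physical therapy visit", Severity.MAJOR),
]
print(passes("first_pass_chronology", errors))  # True
print(passes("demand_letter", errors))  # False
```

The same two errors pass for a first-pass chronology but fail for a demand letter, which is exactly the task-dependent standard described above.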

Measure citation and source traceability, not just readability

For litigation outputs, the most important question is often: Can I quickly verify this against the record?

When evaluating tools, look for whether the output supports efficient verification:

- Are key assertions paired with pinpoint references (document name, page, Bates, or excerpt)?

- Can a reviewer jump to the supporting section quickly?

- Does the tool distinguish between “directly stated in the record” versus “inferred”?

If a system produces polished prose without traceability, the attorney review burden can stay high, or even increase.

Use a scoring rubric your team can apply consistently

A simple rubric makes accuracy evaluation repeatable across matters and reviewers.

| Evaluation area | What to test | What “passable” looks like | Typical failure modes |
|---|---|---|---|
| Record fidelity | Random-sample factual claims and verify against source | Claims match documents, no invented facts | Hallucinated dates, wrong provider, swapped sides |
| Completeness | Compare to your known “must include” facts list | Captures major injuries, key treatments, liability facts | Misses prior injuries, gaps in treatment, comparative fault facts |
| Chronology integrity | Timeline order, date normalization | Dates correct, sequencing consistent | Off-by-one dates, merging separate events |
| Entity accuracy | Parties, providers, facilities, adjusters | Names consistent, role correct | Misattribution (wrong doctor, wrong insurer) |
| Legal drafting reliability | Demands, outlines, letters, trial materials | Draft is structurally usable and fact-grounded | Overconfident legal assertions, incorrect venue-specific language |
| Verification speed | Time for reviewer to confirm key points | Faster than manual, less toggling | Reviewer spends more time hunting sources |
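The chronology-integrity row lends itself to a partly mechanical check. The sketch below assumes a reviewer has already built a reference chronology and matched the tool's events to it by a shared event label; the labels, dates, and the `chronology_issues` helper are hypothetical. It flags off-by-N dates, missing events, events absent from the reference, and out-of-order listing.

```python
from datetime import date

# Hypothetical reviewer-built reference chronology and the tool's chronology,
# each as (event label, date) pairs matched by the reviewer during grading.
reference = [
    ("ER visit", date(2023, 3, 14)),
    ("Ortho consult", date(2023, 3, 28)),
    ("MRI", date(2023, 4, 2)),
]
tool_output = [
    ("ER visit", date(2023, 3, 14)),
    ("MRI", date(2023, 4, 3)),
    ("Ortho consult", date(2023, 3, 28)),
]


def chronology_issues(reference, output):
    issues = []
    ref_dates = dict(reference)
    out_dates = dict(output)
    for event, ref_date in ref_dates.items():
        if event not in out_dates:
            issues.append(f"missing: {event}")
        elif out_dates[event] != ref_date:
            delta = (out_dates[event] - ref_date).days
            issues.append(f"date off by {delta:+d} day(s): {event}")
    # Events the tool reports that the reference does not contain (possible invention).
    for event in out_dates:
        if event not in ref_dates:
            issues.append(f"not in reference: {event}")
    # Sequencing: the tool's listed order should be non-decreasing by date.
    listed = [d for _, d in output]
    if any(later < earlier for earlier, later in zip(listed, listed[1:])):
        issues.append("events listed out of chronological order")
    return issues


print(chronology_issues(reference, tool_output))
```

A merged event usually shows up here as a `missing` entry for one of the two originals, which is a cue for the reviewer to look closer rather than a verdict.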

If you want to go one level deeper, track two practical metrics during the pilot:

- Attorney review time per output type (before vs after).

- Critical error rate per output type.

Those two numbers often predict ROI and risk better than generic model claims.
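A minimal sketch of how those two metrics might be tallied from a pilot log follows. The log format, the baseline minutes, and the `summarize` helper are hypothetical, and "critical error rate" is assumed here to mean the share of outputs with at least one critical error; your firm may prefer critical errors per output instead.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical pilot log: one record per reviewed output.
pilot = [
    {"output_type": "medical_summary", "review_minutes": 35, "critical_errors": 0},
    {"output_type": "medical_summary", "review_minutes": 45, "critical_errors": 1},
    {"output_type": "demand_letter", "review_minutes": 60, "critical_errors": 0},
    {"output_type": "demand_letter", "review_minutes": 50, "critical_errors": 0},
]

# Hypothetical manual baseline: average minutes per output before the tool.
baseline_minutes = {"medical_summary": 120, "demand_letter": 90}


def summarize(records, baseline):
    by_type = defaultdict(list)
    for r in records:
        by_type[r["output_type"]].append(r)
    report = {}
    for output_type, rows in by_type.items():
        avg_review = mean(r["review_minutes"] for r in rows)
        # Share of outputs containing at least one critical error.
        critical_rate = sum(1 for r in rows if r["critical_errors"] > 0) / len(rows)
        report[output_type] = {
            "avg_review_minutes": avg_review,
            "minutes_saved_vs_baseline": baseline[output_type] - avg_review,
            "critical_error_rate": critical_rate,
        }
    return report


report = summarize(pilot, baseline_minutes)
print(report)
```

Keeping the two numbers side by side per output type makes the trade-off visible: a large time saving is not a win if the critical error rate rises with it.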

Stress-testing: find failure modes before they find you

A strong evaluation includes deliberate “break it” testing.

Test OCR and scan quality scenarios

Many legal documents are not born-digital. If your matters include scanned records, test:

- Skewed or low-resolution scans.

- Handwritten notes.

- Multi-column forms.

- Fax artifacts.

If the system’s OCR layer fails, everything downstream can look confident while being wrong.

Test ambiguity and contradiction

Real case files contain inconsistencies.

Create test cases where:

- Two records conflict on date of injury.

- A provider note contains a differential diagnosis that later changes.

- Billing codes do not match narrative notes.

A safer tool will surface uncertainty or request clarification, not “choose” a fact silently.
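One way to test for this behavior, or to enforce it in your own post-processing, is to keep every extracted fact tied to its source and refuse to pick a winner when sources disagree. The sketch assumes a hypothetical extraction format (fact name, value, source document, page) and a hypothetical `resolve` helper; it is not any particular tool's API.

```python
from datetime import date

# Hypothetical extracted facts, each tied to the document and page it came from.
extracted = [
    {"fact": "date_of_injury", "value": date(2023, 3, 14), "source": "ER_record.pdf", "page": 2},
    {"fact": "date_of_injury", "value": date(2023, 3, 15), "source": "PT_intake.pdf", "page": 1},
    {"fact": "date_of_injury", "value": date(2023, 3, 14), "source": "Police_report.pdf", "page": 1},
]


def resolve(fact_name, facts):
    """Return the single supported value, or a report listing every conflicting source."""
    values = {}
    for f in facts:
        if f["fact"] == fact_name:
            values.setdefault(f["value"], []).append(f"{f['source']} p.{f['page']}")
    if not values:
        return {"status": "missing"}
    if len(values) == 1:
        value, sources = next(iter(values.items()))
        return {"status": "consistent", "value": value, "sources": sources}
    # More than one value: surface the conflict instead of choosing silently.
    return {"status": "conflict", "candidates": values}


print(resolve("date_of_injury", extracted)["status"])  # conflict
```

The sketch deliberately does not fall back to majority vote: two records agreeing does not make the third wrong, so the conflict goes to a human.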

Test out-of-distribution documents

Upload something outside the tool’s happy path:

- A record set that includes pediatric notes in an adult case.

- Unusual specialties.

- Non-standard insurer correspondence.

You are checking whether the tool degrades gracefully (saying what it cannot do) or produces confident nonsense.

Risk: the core legal and operational issues to evaluate

Accuracy is only half the decision. The other half is whether the tool fits your ethical duties, confidentiality obligations, and litigation workflow realities.

Professional responsibility and supervision

In the US, competence includes understanding the benefits and risks of relevant technology. Comment 8 to ABA Model Rule 1.1 is often cited for this principle, and it underscores why firms need a real evaluation process, not informal adoption.

You also need to ensure proper supervision of nonlawyers and vendors (commonly discussed in the context of ABA Model Rules 5.1 and 5.3), and protection of confidential information (ABA Model Rule 1.6).

A practical takeaway is that AI outputs should be treated like work from a new, fast junior associate: helpful, but not self-validating.

Confidentiality, privilege, and data handling

For any AI legal tool, you should understand:

- What data is stored (uploaded files, extracted text, outputs, metadata).

- How long it is retained, and whether you can delete it.

- Whether your data is used to train models (opt-in vs opt-out, and what “training” means).

- Who can access data (your team, vendor staff, subprocessors).

- Encryption (in transit and at rest) and access controls.

Do not accept vague answers like “we take security seriously.” Ask for specific documentation, and have counsel review vendor terms where appropriate.

Auditability and defensibility

In litigation, you need to be able to explain what you did.

Even if the AI tool is not discoverable, your process may be scrutinized indirectly through output quality, attorney sign-off, or internal QA.

Useful questions:

- Can you reproduce an output later, or at least understand what documents were used?

- Does the tool keep an activity history showing uploads and output generation?

- Can you export outputs in a way that fits your file management practices?

Bias and outcome risk

Bias in legal contexts is not only about demographics; it can also be a systematic skew in what gets emphasized.

Examples:

- A medical summary that consistently underplays subjective pain reporting.

- A demand draft that overstates certainty in disputed causation.

- An “insights” feature that nudges toward settlement ranges without transparent assumptions.

This is where human review is not optional. You want tools that help you reason, not tools that silently decide.

Hallucinations and “false precision”

A high-risk pattern in AI is false precision: the output includes exact dates, exact dollar amounts, or definitive medical conclusions that the record does not explicitly support.

Your evaluation should include a rule like: If the tool cannot cite support, the claim is treated as unverified.
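That rule is simple enough to apply mechanically during grading. The sketch below assumes each claim has already been split out of the output along with whatever pinpoint references the tool supplied; the `Claim` type and `triage` helper are hypothetical. A citation does not make a claim true, it only makes it checkable, so cited claims still go to the reviewer.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    text: str
    # Pinpoint references (document, page, Bates, or excerpt); empty if the tool gave none.
    citations: list[str] = field(default_factory=list)


def triage(claims):
    """Split claims into cited (still to be checked) and unverified (no support given)."""
    cited = [c for c in claims if c.citations]
    unverified = [c for c in claims if not c.citations]
    return cited, unverified


claims = [
    Claim("MRI showed an L4-L5 disc herniation.", ["MRI_report.pdf p.1"]),
    Claim("Plaintiff missed 12 weeks of work."),
]
cited, unverified = triage(claims)
print([c.text for c in unverified])  # ['Plaintiff missed 12 weeks of work.']
```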

A practical risk checklist you can use in vendor evaluation

| Risk category | What to ask | What you want to hear (in substance) |
|---|---|---|
| Data use | Is my data used for model training? | Clear policy, ideally no training by default, with explicit controls |
| Retention and deletion | How long is data stored, and can we delete it? | Defined retention, admin deletion, support for matter-level cleanup |
| Access and permissions | Can we limit who sees what within the firm? | Role-based access, separation by workspace or matter |
| Incident response | How do you handle security incidents? | Written process, notification commitments |
| Output traceability | Can outputs link back to sources? | Citations, excerpts, or doc references that speed verification |
| QA and updates | How do you evaluate accuracy over time? | Ongoing monitoring, documented changes, predictable versioning |

If a vendor cannot answer these clearly, it is a signal that you will be absorbing risk you cannot easily quantify.

Implementation controls that reduce both accuracy risk and malpractice risk

Even great tools fail without process.

Put review rules in writing

Define when attorney review is required and what “review” means.

For example:

- All demand letters require attorney verification of medical specials, key injury claims, and liability summary.

- All deposition outlines require cross-checking of names, dates, and prior statements.

- Any uncited factual assertion must be verified or removed.

This is not bureaucracy; it is how you keep speed without trading away reliability.

Use matter-level checklists for common outputs

For recurring outputs (medical summaries, demands, deposition outlines), create a “must confirm” checklist tailored to your practice.

Keep it short and high impact, for example:

- Date of loss and mechanism of injury.

- Prior similar complaints or injuries.

- Gaps in treatment.

- Imaging results and key impressions.

- Itemized specials and sources.
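Written review rules and checklists can also be enforced as a simple gate in whatever system holds drafts. This is a minimal sketch assuming a hypothetical mapping from each checklist item to the reviewing attorney's initials; the checklist mirrors the items above, and `ready_to_finalize` is an illustrative name, not a feature of any particular product.

```python
# Hypothetical "must confirm" checklist for a demand letter, mirroring the items above.
DEMAND_CHECKLIST = [
    "Date of loss and mechanism of injury",
    "Prior similar complaints or injuries",
    "Gaps in treatment",
    "Imaging results and key impressions",
    "Itemized specials and sources",
]


def ready_to_finalize(confirmations, checklist=DEMAND_CHECKLIST):
    """confirmations maps a checklist item to the confirming attorney's initials."""
    missing = [item for item in checklist if not confirmations.get(item)]
    return not missing, missing


confirmations = {
    "Date of loss and mechanism of injury": "JD",
    "Prior similar complaints or injuries": "JD",
    "Gaps in treatment": "JD",
    "Imaging results and key impressions": "JD",
}
ok, missing = ready_to_finalize(confirmations)
print(ok, missing)  # False ['Itemized specials and sources']
```

Recording who confirmed each item, rather than a single checkbox, also gives you the sign-off trail discussed under auditability.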

Control who can generate external-facing drafts

Many firms limit who can generate certain outputs or who can finalize them. Role-based permissions can be a meaningful guardrail, especially in a team collaboration workspace.

This becomes even more important when you collaborate with outside vendors (medical records retrieval, experts, investigators). Streamlining onboarding is good, but it should not expand access beyond what is necessary. For agencies and service providers that need secure, permissioned access set up through a single branded link, a structured one-link client onboarding tool can reduce manual access errors while keeping onboarding consistent.

A note on “instant analytics” and settlement insights

Some litigation AI platforms offer case analytics or settlement-focused insights. Treat these as decision support, not decision engines.

When evaluating any analytic output, ask:

- What data is it based on (your uploaded docs only, public data, blended datasets)?

- What assumptions are embedded?

- How does it handle missing information?

- Can you see why it produced a recommendation?

If you cannot interrogate the “why,” you should avoid relying on the “what,” especially in high-stakes negotiation.

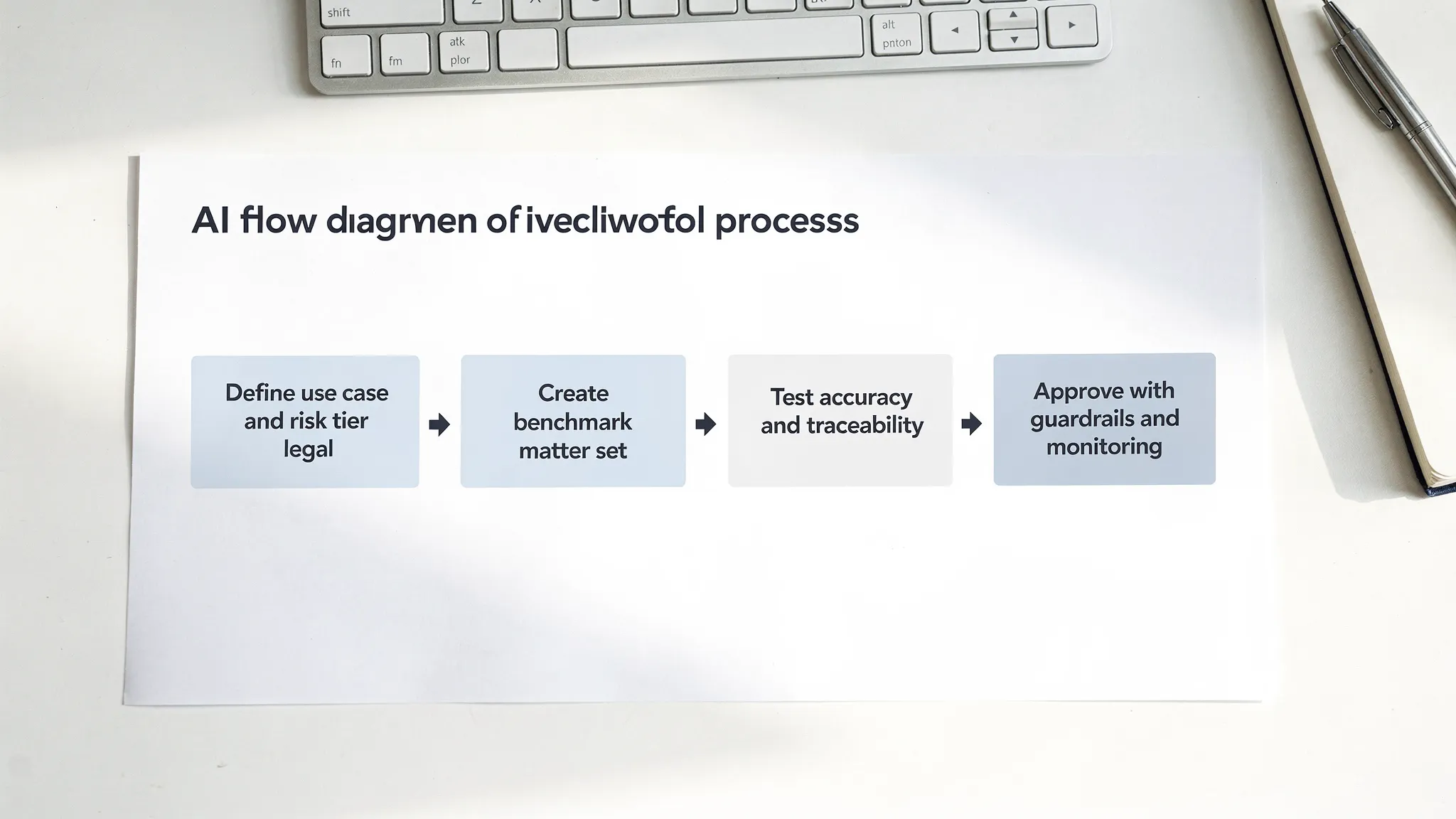

A practical evaluation workflow (30 to 60 days)

The goal is to generate evidence you can rely on, not vibes.

Week 1: Scope and baseline

Pick two to three output types you care about most (for many plaintiff firms, demand letters and medical summaries are high leverage). Measure how long these take today, and what error patterns show up in manual work.

Weeks 2 to 4: Pilot with benchmark set

Run the benchmark matters through the tool. Have two reviewers grade a subset to reduce individual bias. Track critical errors and review time.
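To check whether the two reviewers are actually grading consistently, one common option (a standard statistic, not something this guide prescribes) is Cohen's kappa, which measures agreement beyond what chance alone would produce. A minimal sketch over severity grades, with made-up example grades:

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two reviewers' grades for the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both reviewers must grade the same, non-empty set of items")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected if each reviewer assigned grades at random with their own frequencies.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # Both reviewers gave every item the same single grade (kappa undefined).
    return (observed - expected) / (1 - expected)


# Made-up severity grades for the same six outputs.
grades_a = ["critical", "minor", "minor", "major", "none", "none"]
grades_b = ["critical", "minor", "major", "major", "none", "minor"]
print(round(cohens_kappa(grades_a, grades_b), 3))  # 0.556
```

Values near 1 indicate strong agreement; low values suggest the severity definitions need tightening before the pilot numbers are trusted.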

Weeks 5 to 6: Operational fit and governance

Confirm how the tool fits your workflow:

- Who uploads documents?

- Where do outputs live?

- How do you collaborate and version drafts?

- What is the deletion process at close of matter?

Document the policies you will enforce, even if they are short.

Ongoing: Monitor drift

Accuracy can change as models update and as your document mix shifts.

A lightweight monitoring plan can include:

- Monthly random sampling of outputs for citation checks.

- A channel for staff to report tool failures.

- Periodic re-testing of benchmark matters.
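For the monthly sampling step, a seeded draw makes the sample reproducible, so anyone auditing the QA process later can regenerate exactly which outputs were checked. This is a sketch under assumptions: the output IDs are hypothetical strings, and seeding by a month label is one illustrative choice.

```python
import random


def monthly_sample(output_ids, month, k=10, seed_prefix="qa"):
    """Pick up to k outputs for citation checks; the seed makes the draw reproducible."""
    rng = random.Random(f"{seed_prefix}-{month}")
    ids = sorted(output_ids)  # sort first so the draw does not depend on input order
    return rng.sample(ids, min(k, len(ids)))


output_ids = [f"OUT-{i:03d}" for i in range(50)]
print(monthly_sample(output_ids, "2024-05"))
```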

Where TrialBase AI fits in this evaluation mindset

TrialBase AI positions itself as intelligent litigation support from intake to verdict, turning uploaded documents into litigation-ready outputs like demand letters, medical summaries, and deposition outlines in minutes.

If those are the workflows you want to accelerate, the evaluation approach above maps directly:

- Test medical summaries for record fidelity, completeness, and verification speed.

- Test demand letter drafts for factual precision and source traceability.

- Test deposition outlines for entity accuracy and chronology integrity.

You can learn more about the platform at TrialBase AI, then apply the same benchmark, scoring, and governance process you would use for any AI legal tool. That combination, capable software plus disciplined evaluation, is what produces real time savings without increasing avoidable risk.

Key takeaways

AI legal tools can be transformative, but only if you evaluate them the way litigators evaluate anything else that can change outcomes: with defined standards, adversarial testing, and clear supervision.

Prioritize tools and workflows that:

- Make it easy to verify claims against the record.

- Fail transparently when the record is ambiguous.

- Support confidentiality, access control, and deletion expectations.

- Reduce attorney review time without increasing critical error risk.

If you do that work up front, you get the upside of speed and consistency while keeping your professional obligations and case strategy protected.
